By: Ilma Hasan

April 6 2021

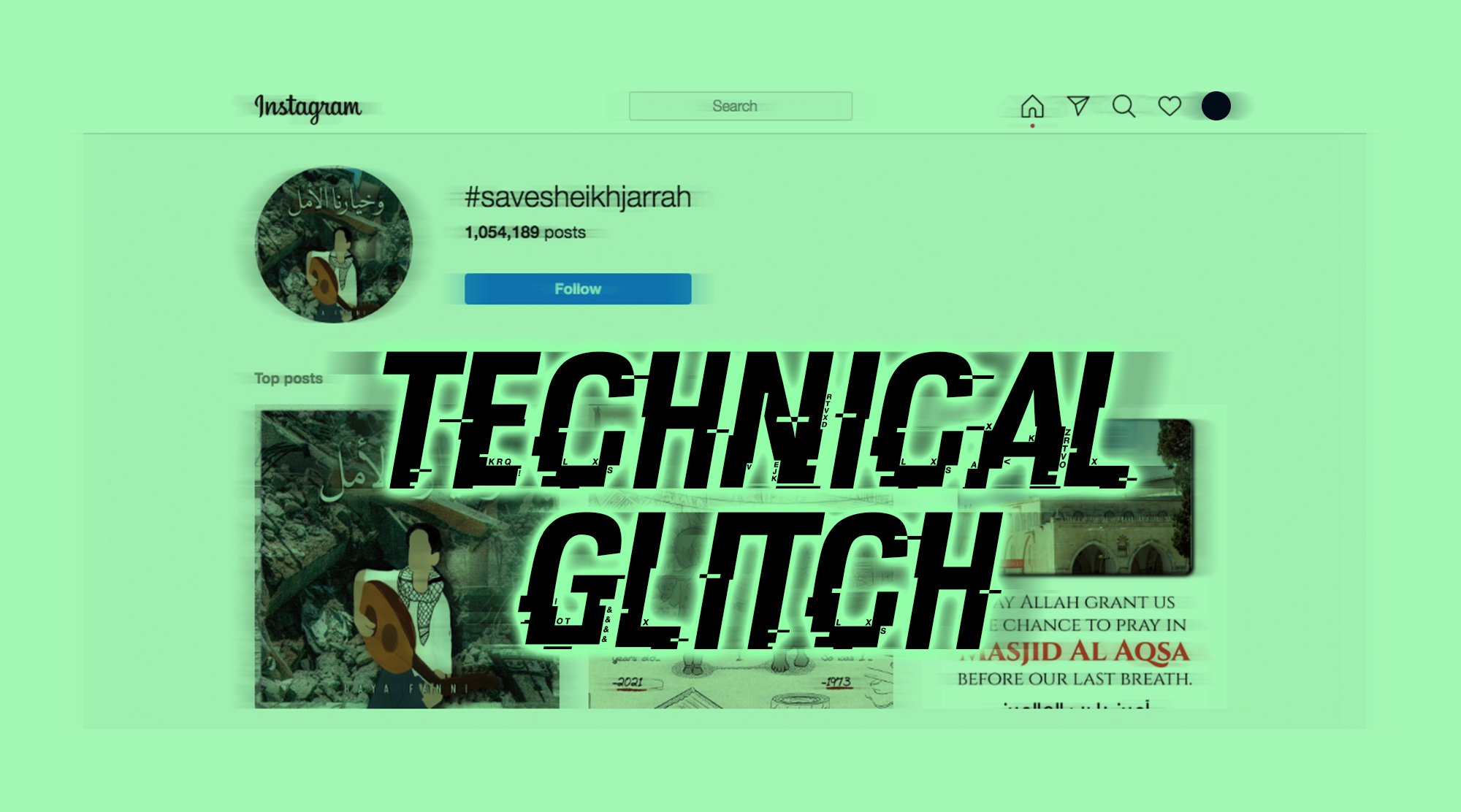

Double Check: Why are marginalized users most affected by 'technical glitches'?

In the first week of May, activists from various countries complained that posts meant to amplify their causes had been removed by Instagram. For some people in India who were using their social media accounts for the first time to help families struggling with COVID-19 procure urgent medicines and oxygen cylinders amid a calamitous second wave, it was a dangerous one-off incident that could put lives at risk. For others, like Indigenous activist Sutton King and her colleagues in New York, it was yet another instance of their community being silenced by social media companies.

On May 6, millions of Instagram and Twitter users around the world complained that their posts, photos, or videos had been removed, and some said their accounts had been blocked. In response to the backlash, Instagram said in a statement that an automated update had caused content re-shared by multiple users to appear as missing, affecting posts in several places, including posts about Sheikh Jarrah and Colombia and posts from Indigenous communities in the U.S. and Canada.

“People around the world who were impacted by the bug, including many in our Palestinian community, saw their Stories that were re-sharing posts disappear, as well as their Archive and Highlights Stories,” a Facebook spokesperson told IOL Tech. “The bug wasn’t related to the content itself, but was rather a widespread technical issue.”

In a series of tweets, Instagram head Adam Mosseri said the bug was a widespread issue affecting millions of posts and that it did not target specific content or hashtags.

A standard response

Although many posts were restored, the company’s “technical glitch” defense came into question. It is a response tech companies have used repeatedly when takedowns affect marginalized communities.

For Divij Joshi, a lawyer and tech researcher, “technical glitch” is not a satisfactory response and only feeds into a known drawback in Facebook’s operations: a lack of transparency and accountability. “They can keep saying it’s a glitch, that’s what Facebook said when posts using #ResignModi were removed, but we don’t really know that. In many cases, it’s not a matter of technical glitch, but a matter of governance and policy,” Joshi told Logically, referring to an incident in which Facebook censored criticism of Indian Prime Minister Narendra Modi’s handling of the pandemic. The platform later claimed it had temporarily blocked the hashtag by mistake, “not because the Indian government asked us to.” The posts remained hidden in India for about three hours; after the incident came to light, Facebook made them visible in India again, reversing its earlier decision that had cited community guideline violations. Joshi says people aren’t being given a sound justification, and cites the systematic removal of posts about Kashmir as another case in which the platform has fallen short.

Mona Shtaya, the Local Advocacy Manager at the Palestinian digital rights organization 7amleh, agrees. According to Shtaya, Palestinians, Kashmiris, Western Saharans and others from the global South are the ones affected by takedowns that are later characterized as technical bugs.

Alleged technical issues have disproportionately affected marginalized communities over the past few years, and the incidents are not limited to the global South. In 2016, Facebook denied rumors of censorship over the removal of Lavish Reynolds’ livestream of her partner being shot by a Minnesota police officer, once again citing a technical glitch for the takedown. The video had one million views.

In November 2019, the platform blamed a technical glitch for the mass removal of posts, including those of Storm.mg, an online Chinese-language media outlet based in Taiwan, which left the outlet unable to publish reports on the platform. Other publications perceived to be supportive of the pan-Green coalition, including Mirror Media, Up Media and Newstalk, were also reportedly unable to post.

Around the same time, a leading digital rights organization, Fight for the Future, accused Facebook of actively blocking its users from sharing a link to a web page critical of Amazon. Facebook users who attempted to share the link received a message saying the page’s content went against Facebook’s community standards. “Whether this was intentional censorship, some technical glitch, or just a mistake made by an overworked human or robot, the end result is the same,” the group’s deputy director Evan Greer told Gizmodo. “The campaign page that we made to give people a voice in their democracy is being blocked by a Silicon Valley giant.”

Experts say that blaming these takedowns on an unspecified technical glitch not only fails to properly justify them but is also a way of misleading people.

“Corporations use such terms to distance themselves from the outcome. It’s a cloudy response because the user will assume it was not something designed to happen, as though the algorithm has an agency or a mind of its own that it decided to glitch,” Smriti Parsheera, a fellow at the CyberBRICS Project, which analyzes digital policies in the BRICS countries, told Logically. “It’s a result of what was coded to the system, or that the system was not efficiently managed. It is the corporation's responsibility to ensure it doesn’t happen.”

Are things changing?

Most experts say the reason takedowns affect certain communities is twofold: high-level policies shaped by institutional agendas, and platform algorithms designed in discriminatory ways because of a lack of representation among the people who build them.

“They’re not made keeping in mind the needs of marginalized people, they’re designed to reproduce normativity, keeping in mind the ideal user. Essentially someone who responds to virality,” according to Joshi. This is reflected not only in the kinds of posts that are removed but also in the content that is amplified and the advertisements users see. For instance, women and members of minority communities are more likely to be shown ads for lower-paying jobs. This systematic discrimination stems from the historical data used to design the algorithms, which is then used to define expected patterns of user behavior in the future. According to Joshi, because that data is biased along lines of race, class, and caste, it is a major source of unjust experiences for users.

Whether calls from users around the world for greater accountability are effecting change in platform governance is hard to assess, since the platforms operate with total opacity. Experts monitoring Facebook and digital policies say nobody knows what goes into coding the algorithm itself. And even if the companies publicly claim to be doing better, there is little insight into whether that translates into changes on the back end.

Some work is being done to make these tech giants more transparent. Amid growing criticism that machine-learning tools have promoted viral hateful or false content, it was reported last week that the European Commission is expected to demand that Facebook, Google, and Twitter alter their algorithms to stop the spread of online falsehoods. It's the first time any country or region will go as far as forcing tech companies to disclose the inner workings of the algorithms used to populate social media feeds.

Parsheera believes it’s a positive step, since these platforms “act as gatekeepers of speech exercising a tremendous amount of control.” However, these policies are still seen as Eurocentric, with few measures for diverse groups.

“There’s a lot of work being done towards generating meaningful transparency to make platforms comply with international standards,” according to Joshi. “However, they are western concepts which need to be responsive to different contexts around the world. These are users who are the largest in numbers but it honestly seems like they simply don’t care.”