By: Iryna Hnatiuk

November 10, 2023

Generative AI and misinformation on the Israel-Hamas digital frontline

Source: Matthew Hunter/Habboub Ramez/ABACA via Reuters Connect

The current Israel-Hamas crisis, which began on October 7, has taken on a new front: that of the digital battleground. The fog of war has shrouded social media, notably X (formerly Twitter), with its community notes program worsening the problem. This fog has even cast its shadow over more established media outlets like The New York Times, which issued an editorial note following its coverage of the October 17 hospital explosion.

This digital battleground has mostly played out through misattributed content, and the role of artificial intelligence (AI) has not been as significant as previously expected, despite the conflict now entering its second month. However, this has revealed more intricate issues in the use of generative AI amid a conflict.

Mission: Gain support and sympathy

AI-generated images primarily serve to bolster support for one side of a conflict. In the Israel-Hamas conflict, examples include a concert hall adorned in the colors of Israel's national flag, images of Ronaldo holding the Palestinian flag, and scenes of protests and rallies, all determined by Logically Facts to be AI-generated.

Images with strong emotional emphasis have also been spotlighted. Among them is a picture of a child under the rubble. This photo doesn't create a new narrative but rather reinforces an existing one: that the Gaza Strip was becoming "a graveyard for children," according to United Nations Secretary-General António Guterres. The use of such images is often symbolic and, quite likely, intentional.

AI-generated picture of a child under the rubble. Source: X/AyaIsleemEn

Mission: Muddy the water

Some AI images are simple to refute. For example, this photo of a supposed Israeli refugee camp, debunked by Logically Facts: the distorted flags carrying two Stars of David and the somewhat wonky people make it clear this is not a real image.

AI-generated image of a non-existent Israeli refugee camp. Source: X/Logically Facts

However, a photo of a supposed body of a child, posted on X by Israeli Prime Minister Benjamin Netanyahu, divided opinion as to whether it was AI or not.

Screenshots from AI or Not, a platform used to identify AI images, claimed the photo was generated by AI. Following this, a post on X claimed, “It took me 5 seconds to make this. Not hard to make a convincing fake photo anymore.”

Not everyone believed it was AI. Hany Farid, a professor at the University of California, Berkeley, who specializes in the analysis and detection of digitally manipulated images, explained to independent media company 404 Media that the application was mistaken and the photo posted by the Israeli PM was genuine.

According to 404 Media, a version of the image also turned up on 4chan with a puppy in place of the corpse, accompanied by the claim that this version was the original.

Picture of a body of a child, published by Israeli Prime Minister Benjamin Netanyahu, and the picture of a puppy, published on 4chan and claimed to be the original photo. Sources: X/4chan/Screenshot

Along with his team, Farid examined both images using their verification methods, paying attention to lines, shades, and light. They concluded that the photo featuring the puppy was, in fact, generated. The team arrived at the same result by passing the photo through their own verification tool trained on real and artificial images.

In a post on X, AI or Not stated, “We have confirmed with our system that the result is inconclusive because of the fact that the photograph was compressed and altered to blur out the name tag.”

Investigative outlet Bellingcat tested AI or Not in September 2023 and found that it struggled when identifying compressed AI images, particularly those that were highly photorealistic.

Denis Teyssou, the head of AFP’s Medialab and the innovation manager of Vera.ai, a project focused on detecting AI-generated images, shared a similar sentiment. Vera.ai did not identify any doctoring in the image of the child's body. However, Teyssou mentioned the limitations of such software and the significant risk that lies in the potential for false positives.

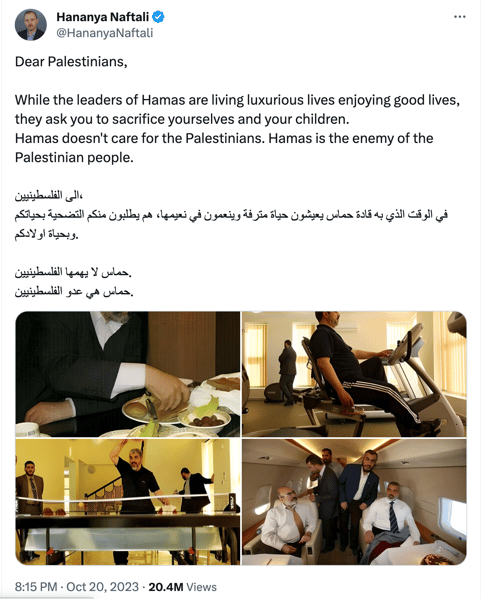

Another instance, by contrast, concerns photos that social media users instantly dismissed as AI. The photos show Hamas leaders leading "luxurious lives." They gained widespread attention and were promptly written off as AI-generated. Vigilant internet users pointed to seemingly obvious signs that they had been created using artificial intelligence software, such as malformed fingers and blurred faces.

Publication on X with pictures of Hamas leaders leading "luxurious lives." Source: X/HananyaNaftali

But the images turned out to be real. They were taken in 2014 and had undergone “upscaling” to increase their resolution. It is unclear whether the upscaling was an attempt to improve the picture quality or to suggest these were fake pictures. It is also hard to predict how AI identifiers will respond to photos that were not generated by AI but were processed (“upscaled”) by AI-based applications.

Mission: Sow doubts and skepticism

The growing influence of generative AI has facilitated various forms of media manipulation. Just as users may presume that AI-generated content is real, the mere presence of such content can sow doubts about the authenticity of any video, image, or text, opening another door for bad actors. This is often referred to as the "liar’s dividend."

Social media users are handed the opportunity to dismiss content as AI-generated, denying facts they don’t like or that contradict their beliefs. Given the high quality of AI-generated images, verifying them can take time. While verification is underway, emotionally charged content spreads at incredible speed, regardless of its veracity.

Cognitive psychologist Stephan Lewandowsky posits that people engage with provocative information, which incites outrage. This makes it more likely for such images to go viral. He also states that “misinformation can often continue to influence people’s thinking even after they receive a correction and accept it as true. This persistence is known as the continued influence effect.”

What about talking heads?

While creating images or videos can be easy, the realm of deepfakes presents greater challenges. Hands, hair, skin details, or clothing often appear unrealistic, blurry, or "too good to be true." For example, a deepfake featuring Biden announcing military intervention immediately raises doubts about its authenticity.

It is worth noting that apocalyptic concerns about the role of AI in armed conflicts have not materialized in the information war between Russia and Ukraine. A Zelenskyy deepfake, where he implores soldiers to lay down their arms, was of notably poor quality, riddled with obvious proportion issues.

A recent paper from the Harvard Kennedy School Misinformation Review labeled concerns about the role of generative AI in the worldwide spread of false information as "overblown." The same review posits that generated materials are used to reinforce existing conspiracy theories, biases, and narratives.

The authors also highlight that although generating increasingly high-quality and realistic images is possible, the more common practice is to manipulate genuine photos and videos. Dealing with generated images or deepfakes is less cumbersome than tackling manipulated real materials. An earlier Logically Facts analysis on AI-generated images also pointed this out but observed that AI has the means to empower people who may not have previously had the technical know-how to manipulate or doctor an image.

Can we rely solely on technology?

Regarding AI classifiers, Hany Farid offers the following perspective: "Classifiers are a valuable part of the toolkit, but they are just part of the toolkit. When evaluating these things, there should be a series of tests."

AI or Not may produce inaccurate results, according to its developer, Optic. Vera.ai detects AI-generated images with approximately 77 percent accuracy. Aiornot.com can debunk claims very quickly but is not always accurate. There is always a risk of false positives, and these tools cannot provide absolute proof that content is or is not AI-generated. No AI detection tool has a 100 percent accuracy rate, at least not yet.
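To see why a figure like 77 percent falls short of proof, it helps to work through the arithmetic. The sketch below is purely illustrative and rests on two assumptions that do not come from any of the tools mentioned: that a detector is right 77 percent of the time on both AI-generated and genuine images, and that one in ten of the images being checked is actually AI-generated. The function name and the one-in-ten mix are hypothetical.

```python
def share_of_flags_that_are_wrong(accuracy: float, ai_share: float) -> float:
    """Fraction of images flagged as AI that are in fact genuine.

    Assumption (hypothetical): the detector is correct `accuracy` of the
    time on AI-generated images and on genuine images alike.
    """
    true_flags = accuracy * ai_share                # AI images correctly flagged
    false_flags = (1 - accuracy) * (1 - ai_share)   # genuine images wrongly flagged
    return false_flags / (true_flags + false_flags)


# Hypothetical mix: 1 in 10 images checked is AI-generated.
print(round(share_of_flags_that_are_wrong(0.77, 0.10), 2))
```

Under these assumptions, roughly 73 percent of the images flagged as AI would be genuine photographs. The exact share depends entirely on the assumed mix, which is one reason a single classifier result is better treated as a lead to investigate than as a verdict.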

These limitations may be overlooked when a result confirms a person’s feelings, beliefs, or biases. Valerie Wirtschafter, a fellow in Foreign Policy and the Artificial Intelligence and Emerging Technology Initiative at the Brookings Institution, agrees that confirmation bias plays a significant role. She notes that people tend to seek out information that validates their beliefs while discounting information that contradicts them. She calls it a form of motivated reasoning and highlights that this is particularly prevalent during the ongoing crisis.

Platforms are now under pressure to label AI-generated content. While steps are being taken to address the issue, the details remain unclear. For instance, if someone on TikTok overlays real audio on an image or video that has been manipulated, or vice versa, how would this be labeled? Labeling the entire video as AI-generated could be misleading while labeling technology is still being developed.

Bottom Line

In the context of the Israel-Hamas conflict, the possibility of using AI has proven to be more pervasive and influential than the AI-generated imagery itself. It empowers online users to reject established facts and distorts perception, but the same users are generally quick to spot and shun AI imagery.

Plenty of existing war footage from previous Middle East conflicts continues to inundate social media, touted as current, along with visuals from other conflicts. The real pictures from the current war are shocking enough: there is no need to create new ones.

The more substantial concern about AI could lie in its role in elections, where it can be used as a tool to shape public opinion. Recent elections in Slovakia saw the use of AI, with deepfakes of politicians frequently going viral. In the U.S., an image depicting an explosion near the Pentagon was swiftly identified as AI-generated, yet it profoundly impacted the stock market. Finally, an AI-generated image of the Pope in Balenciaga demonstrated that even imperfect technologies can deceive tens of thousands of people.